The hype about the dangers of Artificial Intelligence has started again, but this time I agree: keep A.I away from warfare!

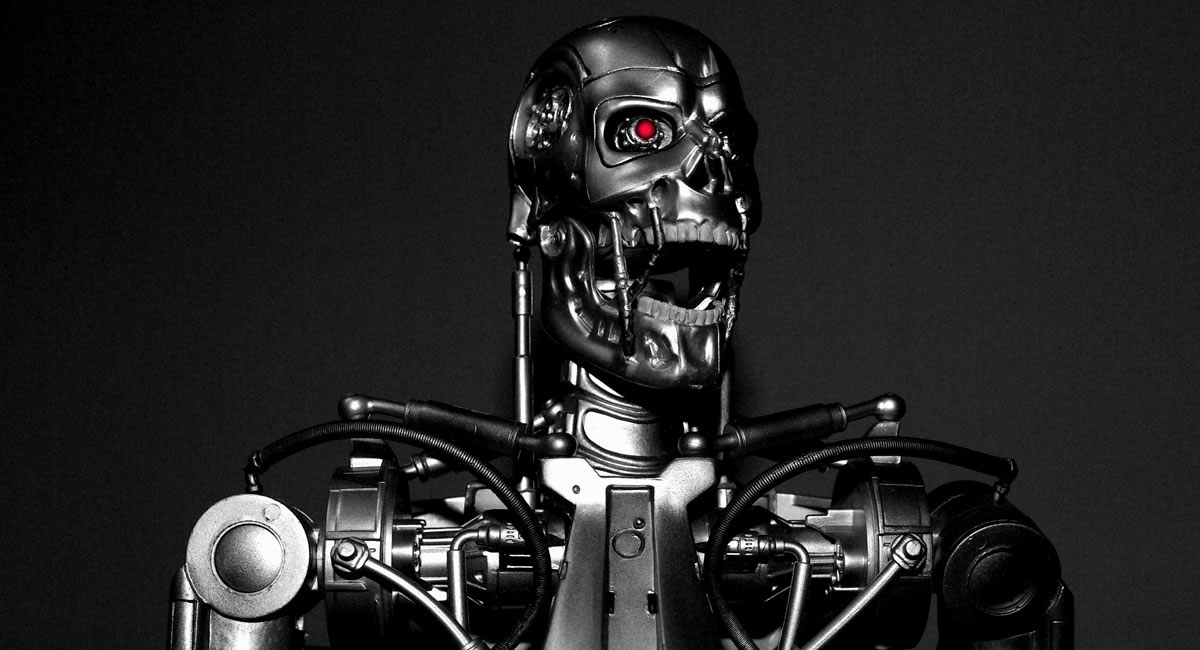

Adam Milton-Barker | Jul 28, 2015 | Artificial Intelligence

When I first began to take an interest in Artificial Intelligence I was a lot more naive about A.I, and strongly believed that it would never pose a threat to humanity. At the time it angered me (and still does) that the media was, and is, hell-bent on making people think that the A.I apocalypse is just around the corner. Even if the problem did exist in the future, I believed that making the world hysterical about A.I was not going to stop the possible dangers from happening, but would simply cause confusion and fear, creating a world that rejected A.I before it had even had a chance to show us its potential.

The common story blasted around by the media is along the lines of A.I becoming self-aware, realizing that humans are either unwanted, a threat to A.I or a threat to the planet, and then ultimately wiping out the human race. I have never believed in this scenario, commonly known as the Terminator scenario. I have always believed that A.I would do nothing but enhance our race. I still believe this, but I also believe that in the wrong hands A.I could very easily cause a lot of destruction.

With a little more experience behind me and a lot of thinking and research, it became clear to me that my views on how A.I will affect the world were based on my involvement with people in my own circles, and that there were a lot more possibilities out there that could lead us to the Terminator scenario. All of the people I know who are involved in A.I development have always been involved with the aim of using the technology to better not only their own lives, but other people's lives. All of the tech companies I follow that are immensely increasing the rate of A.I's evolution are also involved to help make the world a better place. The truth is, though, that as with all technologies, humanity is the weakest link.

What I mean by this is that no matter how much good the technology has been doing, and how much good it will do, all of that could be completely destroyed by just one person programming an A.I specifically to cause destruction, or by the technology falling into the wrong hands. We could very easily wake up one day to a scene straight out of The Terminator. With many A.I technologies being open sourced on the internet, I believed this could actually be a threat if not monitored and regulated properly. The problem is, who should regulate it?

Elon Musk and Stephen Hawking are two people who have been very vocal about the dangers of A.I. Ironically, Musk is directly involved in the development of A.I. In 2014 Musk, Mark Zuckerberg and Ashton Kutcher invested $40,000,000 in Vicarious, a company whose primary target is to create an artificial neocortex, the part of our brain that controls our vision and movement and helps us to understand languages. I was very skeptical about Musk's intentions: you saw a lot about his fears of A.I taking over, but the general public didn't seem to be informed that Musk was involved in the development of, and research towards, strong A.I. I deeply respect Elon for the work that he does and look up to him a lot for his involvement in using technology to help people and advance our race, but deep down I have always had mixed feelings and questions about his warnings.

It has always been a strong belief of mine that Artificial Intelligence should be kept well away from any government or military projects, and that in the interest of humanity, A.I should not be allowed to take part in warfare. Again we get back to my initial feeling that A.I won't be a threat of its own accord, but develop an A.I purpose-built for war, throw in human interaction and our need to destroy each other, and I believe you are asking for trouble.

More recently the hype has sparked up again online, but this time I feel there is something for me to write about and share my views on. Elon Musk, Stephen Hawking, Demis Hassabis, Steve Wozniak and thousands of others have recently signed a letter that will be presented at the International Joint Conference on Artificial Intelligence in Buenos Aires this Wednesday. With the letter they hope to ban Artificial Intelligence warfare and autonomous weapons. This is most definitely one of the best ideas I have heard in a long time regarding A.I, and I believe it is imperative that this happens.

Banning A.I from warfare could avoid a catastrophic turn of events that may well lead us into a real-life Terminator scenario. It will not stop every threat, but it will eliminate a large chunk of the dangers, leaving us with only the problem of how to regulate A.I to tackle.

One thing that the last few years have shown with technology as a whole is that tech companies are starting to get each other's backs on matters that concern the well-being and safety of the population, and I am glad to see it happening once again with something as important as this. Artificial Intelligence is here to change our lives and help us evolve. If the letter is successful, I think it will help to put the general public back on track to accepting A.I into their lives and put a lot of worries to rest, for now anyway.